Deployment Overview#

This page covers general information about deploying Dagster. For guides on specific platforms, see Deployment Guides.

Architecture#

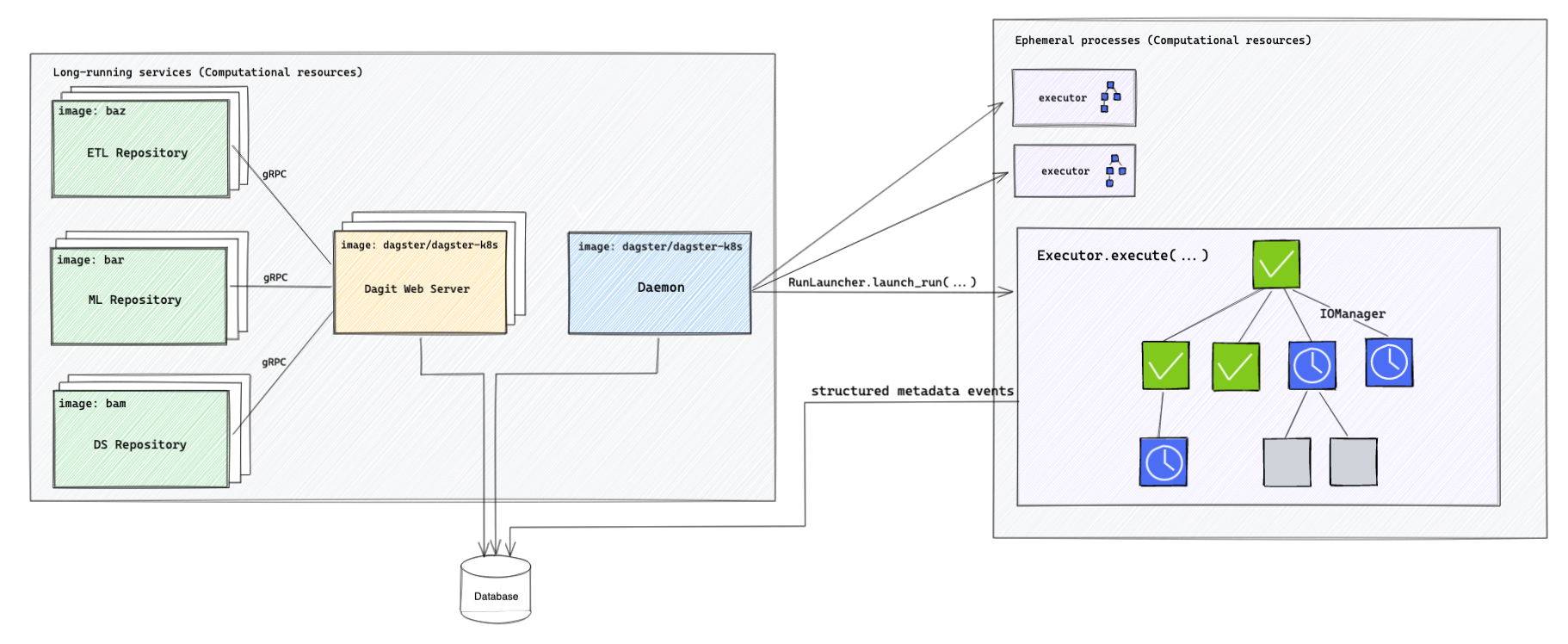

This diagram shows a generic Dagster deployment. The components of the deployment are described below.

Long-running services#

Dagster requires three long-running services. You can just run Dagit and the Dagster Daemon for simple deployments, and Dagit will manage running User Repository Deployment services for you. For larger deployments, the User Repository Deployment services can be run and managed separately.

| Service | Description | Required |

|---|---|---|

| Dagit | Dagit serves the user interface and responds to GraphQL queries. It can have 1 or more replicas. | Yes |

| Dagster Daemon | The Dagster Daemon operates schedules, sensors, and run queuing. Currently, replicas are not supported. | Required for schedules, sensors, and run queuing |

| User Repository Deployments | User Repository Deployments serve metadata about user pipelines. You can have many repository servers, and each server can have 1 or more replicas. | Recommended. Otherwise, Dagit and the Daemon must have direct access to user code. |

Configuring Dagster Deployments#

Dagster deployments are composed of multiple components, such as storages, executors, and run launchers. One of the core features of Dagster is that each of these components is swappable and configurable.

If you don't provide any custom configuration, Dagster automatically uses a default implementation of each component. However, you can swap out the default implementation for another Dagster-provided one or even write a custom implementation. For example, Dagster uses the SqliteRunStorage by default to store information about pipeline runs. You can swap it out with the Dagster-provided PostgresRunStorage instead or even write a MongoRunStorage.

Based on the component's scope, the component is configured either at the Dagster Instance or Pipeline Run level. Access to user code is configured at the Workspace level. The table below describes how to configure components at each of these levels.

Dagster provides a few vertically-integrated deployment options that abstract away some of the configuration options described below. For example, with Dagster's provided Kubernetes Deployment, configuration is defined through Helm values, and the Kubernetes deployment automatically generates Dagster Instance and Workspace configuration.

| Level | Configuration | Description |

|---|---|---|

| Dagster Instance | dagster.yaml | The Dagster Instance is responsible for managing all deployment-wide components, such as the database. You can specify the configuration for instance-level components in dagster.yaml. |

| Workspace | workspace.yaml | The workspace defines how to access and load your code. You can define workspace configuration using workspace.yaml. |

| Pipeline Run | run config | A pipeline run is responsible for managing all pipeline-scoped components, such as the executor, solids, and resources. These components dictate pipeline behavior, such as how to execute solids or where to store outputs. You can specify configuration for run-level components using the pipeline run's "run config." Run config is either defined in code in the Dagit playground |

Pipeline Execution Flow#

Pipeline execution flows through several parts of the system. The components in the table below handle execution in the order they are listed. This table describes runs launched by Dagit. Runs launched by schedules and sensors go through the same flow, but the first step is called by the Dagster Daemon instead of Dagit.

In a deployment without the Dagster Daemon, Dagit calls the Run Launcher directly, skipping the Run Coordinator.

| Component | Description | Configured by |

|---|---|---|

| Run Coordinator | The Run Coordinator is a class invoked by the Dagit process when runs are launched from the Dagit UI. The run coordinator class can be configured to pass runs to the Daemon via a queue. | Instance |

| Run Launcher | The Run Launcher is a class invoked by the Daemon when it receives a run from the queue. This class initializes a new run worker to handle execution. Depending on the launcher, this could mean spinning up a new process, container, Kubernetes pod, etc. | Instance |

| Run Worker | The Run Worker is a process which traverses a pipelines's DAG and uses the Executor to execute each solid. | N/A |

| Executor | The Executor is a class invoked by the Run Worker for running user solids. Depending on the Executor, solids run in local processes, new containers, Kubernetes pods, etc. | Run Config |